Imagine hiring a brilliant new intern. They are incredibly fast, have access to a world of information, and can generate reports, emails, and ideas in seconds. However, you soon realise they sometimes get facts wrong, mix up details, or confidently present information that is completely fabricated. You wouldn’t fire them, but you certainly wouldn’t let them make important decisions without your final approval. This is the reality of using Artificial Intelligence today. While AI is transforming our work and study lives here in Malaysia, recent findings have sent a clear message: we must approach it with a healthy dose of caution. This article explores the double-edged sword of AI, guiding you on how to use it smartly without falling into its traps.

The AI Wave and Its Undercurrents

Artificial Intelligence is no longer a concept from science fiction; it’s a daily tool for many Malaysians. Students use it to simplify complex topics, marketers use it to craft compelling copy, and developers use it to write code more efficiently. The promise is immense: increased productivity, new creative avenues, and access to instant information. This wave of innovation is exciting and holds the potential to push our industries forward. However, beneath the surface of this powerful wave lies a strong undercurrent of unreliability. A growing body of evidence suggests that the very tools we are beginning to rely on can also be sources of significant error and misinformation.

The Reliability Challenge: When AI Gets It Wrong

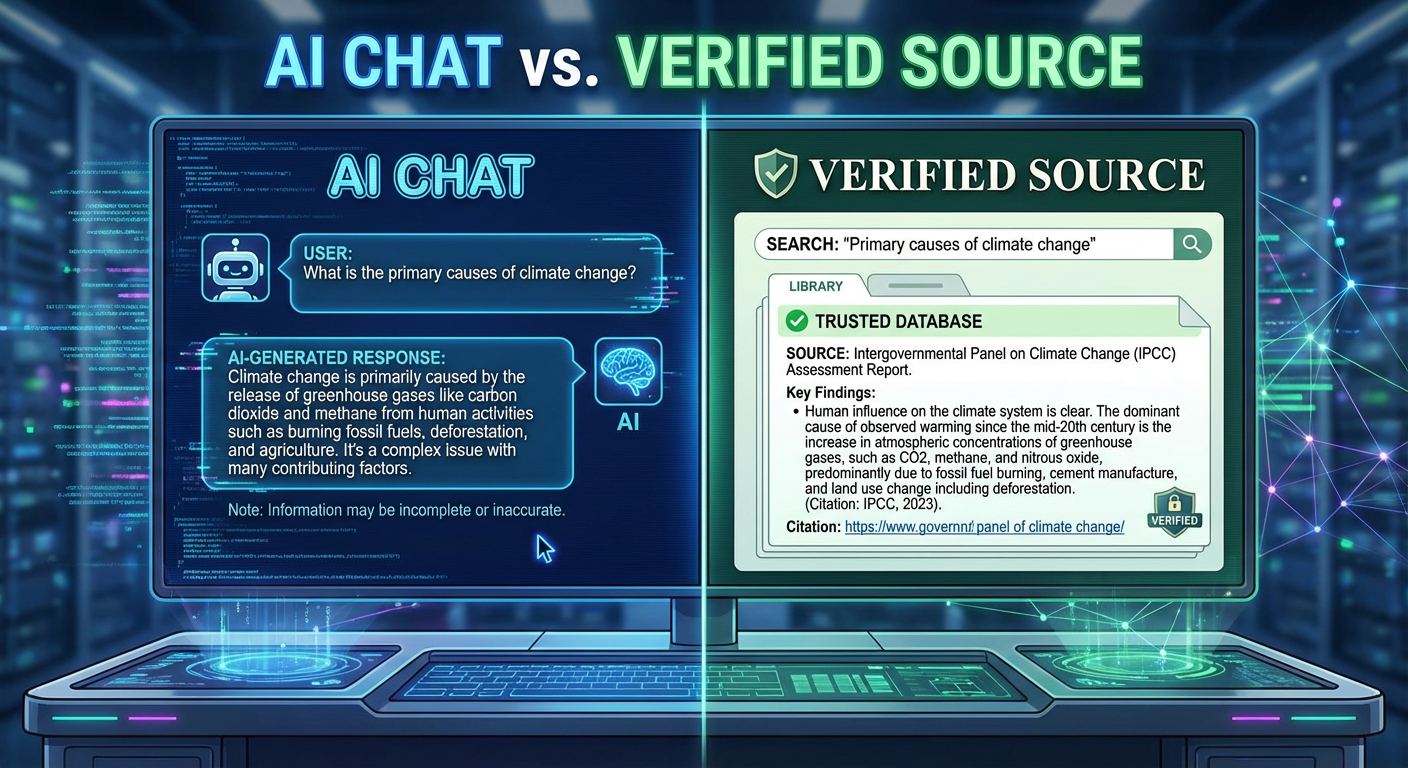

The core issue isn’t whether AI is useful, but whether it is trustworthy. A recent comprehensive study that tested several popular AI chatbots found alarming accuracy problems. These systems, designed to provide correct answers, often generated plausible but incorrect information. For a student writing a thesis or a professional preparing a business proposal in Kuala Lumpur, this is a serious risk. Relying on a flawed AI-generated fact could undermine an entire project. These findings highlight the very real limitations of AI for users in Malaysia and remind us that these tools lack true understanding and context. They are pattern-matching machines, not thinking beings, and without careful oversight, they can lead us astray.

Your New Co-Pilot, Not the Pilot

The most effective way to approach AI is to reframe its role. Think of it not as an infallible oracle that holds all the answers, but as a sophisticated co-pilot or assistant. A pilot uses autopilot to handle routine tasks, but they always remain in command, ready to take over during turbulence or for critical manoeuvres like landing. Similarly, we should use AI to assist with brainstorming, drafting initial ideas, or summarising long documents. The final judgment, fact-checking, and critical decisions must remain firmly in human hands. This mindset is the foundation of responsible AI use in Malaysia, ensuring that technology serves us, not the other way around.

Developing a Critical Eye for AI

So, how do we practise this critical approach? It begins with questioning everything the AI produces, especially when it involves data, dates, or important claims. Here are a few practical steps:

- Verify, Then Trust: Always cross-reference AI-generated information with reliable sources. For academic work, use established journals; for business data, consult official reports.

- Use It as a Starting Point: Treat AI output as a first draft. It can help you overcome writer’s block or structure your thoughts, but the refinement, tone, and factual accuracy are your responsibility.

- Understand Its Blind Spots: AI has no personal experience or emotional intelligence, and it does not grasp cultural nuance on its own. It cannot understand the subtle context of a Malaysian market trend or the appropriate tone for a sensitive client email without precise human guidance.

Smart Adoption for a Smarter Malaysia

Embracing the future means learning to work with AI, not just using it. For professionals and students across Malaysia, the goal should be to become savvy AI users who understand its power and its pitfalls. Acknowledging AI’s limitations is not about rejecting the technology; it’s about engaging with it more intelligently. By developing habits of verification and critical thinking, we turn a potential liability into a powerful asset. Promoting responsible AI use in Malaysia will be key to ensuring our workforce can leverage these tools for genuine innovation and growth, staying ahead of the curve without sacrificing quality or integrity.

In conclusion, the message for all Malaysian users is clear: embrace AI with enthusiasm but also with your eyes wide open. The recent findings on AI reliability are not a stop sign, but a set of important instructions for how to navigate this new technology safely. By treating AI as a capable but fallible assistant, a co-pilot that needs a human commander, we can harness its incredible potential while protecting ourselves from the risks of misinformation. The future isn’t about choosing between human intelligence and artificial intelligence; it’s about creating a powerful partnership between the two. Developing this skill of critical engagement is what will ultimately separate the successful AI users from those who are led astray.
